It can be used to change the dimension order, and with it the shape, of a given tensor without changing its data, allowing users to arrange the same data in different ways.

The PyTorch permute method is a useful tool for rearranging the dimensions of tensor objects. The resulting tensor has the same data as the original tensor, but with the dimensions rearranged. For example, a 3D tensor with shape (2, 3, 4) can have its dimensions reordered from (2, 3, 4) to (4, 2, 3) by calling `permute(2, 0, 1)` on it. The steps are: create the 3D tensor, permute its dimensions, then print the shape of the original tensor and of the permuted tensor.
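The three steps described above can be sketched as follows. This is a minimal illustration rather than the original listing, which did not survive on this page; the variable names `x` and `y` and the use of `torch.arange` to fill the tensor are assumptions made for the example.

```python
import torch

# create a 3D tensor with shape (2, 3, 4); the values 0..23 are arbitrary
x = torch.arange(24).reshape(2, 3, 4)

# permute the dimensions from (2, 3, 4) to (4, 2, 3):
# new dim 0 is old dim 2, new dim 1 is old dim 0, new dim 2 is old dim 1
y = x.permute(2, 0, 1)

# print the shape of the original tensor and the permuted tensor
print(x.shape)  # torch.Size([2, 3, 4])
print(y.shape)  # torch.Size([4, 2, 3])

# the data is unchanged: y[k, i, j] is the same element as x[i, j, k]
print(y[3, 1, 2].item() == x[1, 2, 3].item())  # True
```

Each argument to `permute` names the old dimension that should appear at that position in the result, which is why `(2, 0, 1)` produces the shape (4, 2, 3).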

The method is called on the tensor object, and takes the desired order of dimensions as an argument.


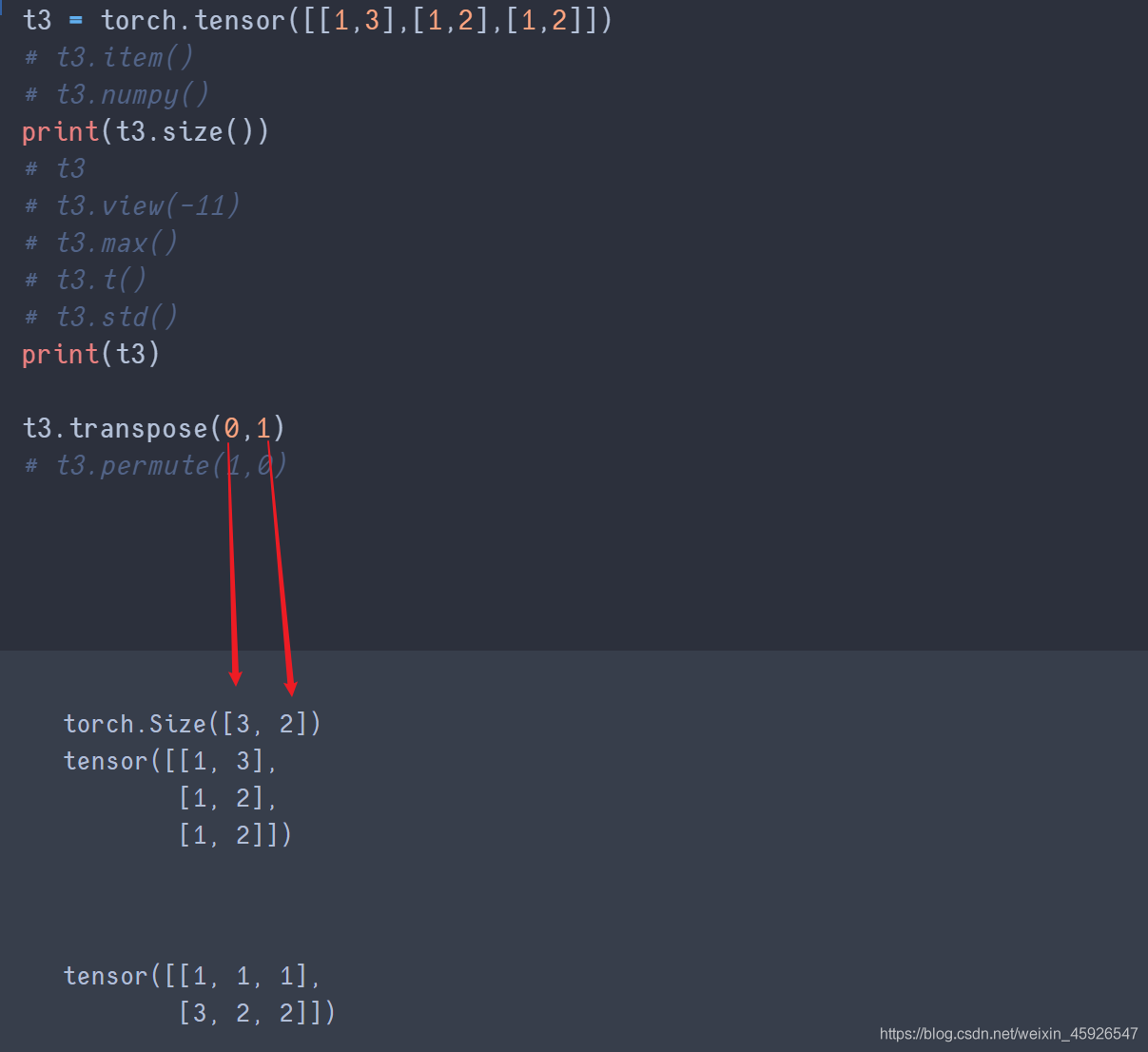

The PyTorch permute method is used to reorder the dimensions of a tensor object. It takes an argument specifying the desired order of dimensions and returns a tensor with all of the same data as before, now arranged according to the specified dimension ordering.

A question that comes up about permuting vectors in PyTorch reads: "there seems to be a function pytorch.permute(), but I can’t find any documentation for it, and when I try it doesn’t seem to work as I might expect (seems to be a no-op)." That result is expected: permute reorders dimensions, not the elements inside a dimension, and a 1D tensor has only one dimension to reorder.

There are enough different ways of reordering dimensions that it's hard to generalize, and a transpose/swap may be hard to understand at first. The difference from a reshape is easier to see on a small case: compare `np.arange(6).reshape(2,3)` with its transpose, `np.arange(6).reshape(2,3).transpose(1,0)`. Reshape can be used to combine 'adjacent' dimensions, but it doesn't reorder the underlying data; that is, `x.ravel()` remains the same after a reshape. So while a reshape of (2,2,3,2) to (2,3,4) is allowed, the apparent order of values is probably not what you want. The direct way to get there is to swap the middle two dimensions first: `x.transpose(0,2,1,3).shape`. NumPy also has a `stack` that joins arrays on a new axis, so the last concatenate and reshape can be skipped with `np.stack((s1,s2))`. (This example was worked with NumPy methods because TensorFlow was not installed.)

The same distinction applies in PyTorch. For a tensor `a` of shape (2, 3, 4), look at the difference between `a.view(3,2,4)` and `a.permute(1,0,2)`: the shape of the two resulting tensors is the same, but the ordering of elements is not. A view keeps the elements in their original order, whereas permuting a tensor changes the order in which its elements appear.
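The NumPy comparison above can be tried directly. The sketch below is illustrative only: the arrays `s1` and `s2` are hypothetical stand-ins, since the original arrays are not shown on this page.

```python
import numpy as np

m = np.arange(6).reshape(2, 3)   # rows: [0 1 2] and [3 4 5]
t = m.transpose(1, 0)            # rows: [0 3], [1 4], [2 5]
r = m.reshape(3, 2)              # rows: [0 1], [2 3], [4 5]

# reshape keeps the flattened order, transpose does not
print(m.ravel())  # [0 1 2 3 4 5]
print(r.ravel())  # [0 1 2 3 4 5]
print(t.ravel())  # [0 3 1 4 2 5]

# np.stack joins arrays on a new axis, replacing concatenate + reshape
s1 = np.zeros((3, 4))            # hypothetical example arrays
s2 = np.ones((3, 4))
stacked = np.stack((s1, s2))
via_reshape = np.concatenate((s1, s2)).reshape(2, 3, 4)
print(stacked.shape)                         # (2, 3, 4)
print(np.array_equal(stacked, via_reshape))  # True
```

Both `r` and `t` have shape (3, 2), yet only `r` preserves the original flattened order, which is the point of the comparison.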
The order of the elements in memory in Python, PyTorch, NumPy, C++, etc. is row-major: the top row of a matrix holds the [first, second, ...] stored elements. In a column-major layout, the top row instead holds the [first, third, ...] stored elements of a two-row matrix. For higher-dimensional tensors, row-major means elements are ordered from the last dimension to the first. You can easily visualize this using `torch.arange` followed by `.view`: start from `a = torch.arange(24)` and view it as a 3D tensor. The elements are ordered first by row (last dimension), then by column, and finally by the first dimension.

When you reshape a tensor, you do not change the underlying order of the elements, only the shape of the tensor. permute() is mainly used to exchange dimensions and, unlike view(), it changes the order in which the elements of the tensor are read. It does this by returning a view with rearranged strides, so the stored data itself is not copied.

Since there are several possible swaps, I won't try to suggest the right one.
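A short sketch of the view-versus-permute difference follows. The shape (2, 3, 4) is an assumption chosen so that both calls produce a (3, 2, 4) result; the stride and pointer checks are added here only to show that permute returns a view rather than a copy.

```python
import torch

a = torch.arange(24).view(2, 3, 4)

# row-major: the last dimension varies fastest
print(a[0, 0])  # tensor([0, 1, 2, 3])
print(a[0, 1])  # tensor([4, 5, 6, 7])
print(a[1, 0])  # tensor([12, 13, 14, 15])

v = a.view(3, 2, 4)      # same flat order, new shape
p = a.permute(1, 0, 2)   # first two dimensions swapped

print(v.shape == p.shape)  # True
print(torch.equal(v, p))   # False
print(v[0, 1])             # tensor([4, 5, 6, 7])
print(p[0, 1])             # tensor([12, 13, 14, 15])

# permute only rearranges strides over the same storage
print(a.stride(), p.stride())        # (12, 4, 1) (4, 12, 1)
print(p.is_contiguous())             # False
print(p.data_ptr() == a.data_ptr())  # True
```

Because `p` is not contiguous, calling `p.view(...)` on it can raise an error; `p.reshape(...)` or `p.contiguous().view(...)` are the usual alternatives.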

The question behind this was: "Most of the time I am able to get the dimensions right, but the order of the numbers is always incorrect." reshape can combine adjacent dimensions, e.g. into (4,3,2) or (2,2,6), since it does not reorder the underlying flattened data. The data order that you want probably requires an axis transpose/swap.
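To make this concrete, here is a minimal sketch assuming a (2, 2, 3, 2) array filled with `np.arange`. The array `x` is a hypothetical stand-in for the one in the question, and which result is actually wanted depends on the data.

```python
import numpy as np

x = np.arange(24).reshape(2, 2, 3, 2)  # hypothetical stand-in array
flat = x.ravel()

# combining adjacent dimensions leaves the flattened order untouched
print(x.reshape(4, 3, 2).shape)  # (4, 3, 2)
print(x.reshape(2, 2, 6).shape)  # (2, 2, 6)
print(np.array_equal(x.reshape(4, 3, 2).ravel(), flat))  # True

# (2, 3, 4) is also allowed, but it just re-chunks the same flat order
reshaped_only = x.reshape(2, 3, 4)
print(reshaped_only[0, 0])  # [0 1 2 3]

# swapping the middle two axes first merges dimensions 1 and 3 instead
swapped_then_reshaped = x.transpose(0, 2, 1, 3).reshape(2, 3, 4)
print(swapped_then_reshaped[0, 0])  # [0 1 6 7]
print(np.array_equal(swapped_then_reshaped.ravel(), flat))  # False
```

Both results have shape (2, 3, 4); only the transposed version changes which values end up next to each other.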

